This is often because it's difficult to decide and articulate exactly what research you need to do and why. Rigid methods of working don't help here, and can undermine the real need for discovery research. Not every problem needs user interviews, field studies and attitudinal surveys before you even get to a prototype, so baking those into your process for every learning solution is likely to mean people either simply say no, or go somewhere else for a solution.

So, having a clear way to explain which research methods you want to use, and why, helps you overcome that. You can show stakeholders what you need, engage them in the process, and remove the barriers to doing effective discovery.

In this article, we'll explore a simple framework that helps you articulate what learning design research you need to do and why.

But first... learning design is poker, not chess

Before deciding what discovery methods you need, it's important to understand that whatever solution you create, there will be an element of risk. Whether people come to you with a business problem to solve, a module or training day in mind, an asset to fit into their learning experience, or a single pane in a storyboard, they are coming to you to achieve the best possible outcome. But there is always a chance, even if it's very small, that your solution won't achieve that outcome.

The problems we solve in learning are highly contextual, so much so that we will never have enough data to create a 100% risk-free solution. In her book 'Thinking in Bets', Annie Duke explains that life is like poker, not chess. In chess, all the information you need to make a decision is on the board in front of you, and if you lose, it's down to you making the wrong decision. In poker, there's an element of chance: even if you make the right decision, it might not have the outcome you expect. The same analogy holds for the problems we solve and the decisions we make most of the time in our work as learning professionals.

So, what does that mean for the discovery process? Your work and solutions will be a series of bets. Your duty as a learning professional is to have enough data to make the best bet possible on achieving the right outcome, and reduce the risk of that bet to a level you and the business are comfortable with.

A simple matrix for choosing discovery methods

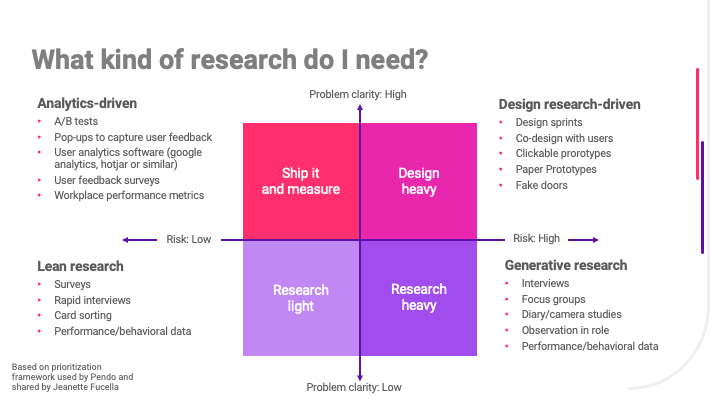

I came across this framework while working with Robin Zaragoza at The Product Refinery, and she in turn had discovered it through Jeanette Fuccella, Director of UX Research at Pendo. In its original form, it addresses a similar challenge that product managers often face in articulating what kind of discovery they need to do, and with a few simple adaptations, it works well for the problems we solve in learning.

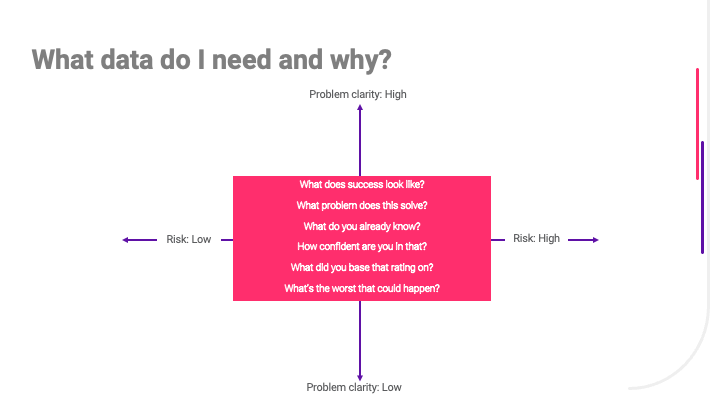

To start with, you need to be clear on how well the problem is already understood (problem clarity, the y axis) and the risks involved in shipping a solution to the problem (risk, the x axis). There are some simple questions you can ask to find this out.

Problem clarity - Y Axis

Problem clarity is about how well you understand the problem already. High clarity means you know the problem is one worth solving and enough about the audience and context to make a bet on the best solution. Low clarity means the opposite. There are some simple questions you can ask to understand from the person coming to you with the problem where they are at on this axis, what data is already available and how reliable that data is.

Question: What does success look like? At this stage, you're often looking for the problem behind the problem. So, when you ask a stakeholder about this and they say 'Success is... a really great e-learning module about x', you can work outwards to understand the underlying challenge using questions like 'OK, and what will people do differently after this module?' and then 'If they do that, what effect will it have?'

TIP: Some stakeholders will be able to give you the business impact and context for the problem they are solving, others need a little coaching and questioning to get there. Be patient and use different questioning approaches to get the answers you need if the information isn't there immediately. If they just don't know, you could also try asking 'Who might know the answer to that question?'

Question: What problem does this solve? It might seem like this question should come first, but my experience of having this conversation countless times over the years is that people come with an idea of the thing they want and can clam up if you cut them off and ask about the underlying problem straight away. It's better to start with what they want and work back to understand the need. If you've used the methods above to get to the underlying challenge people are looking to solve, you can play back your understanding here: 'It sounds like x is the challenge'. If not, now's the time to ask about the underlying problem.

Question: What do you know already about this problem? Once you've helped them articulate the problem, asking this question shows you what level of problem clarity you have. Keep your questions open at this stage and let them give you a dump of everything they know.

Question: On a scale of 1-99 (nothing is 100%), how confident are you in that observation? This question is great for identifying assumptions and gaps in the data without suggesting that the stakeholder is wrong. It's a good way to ease into challenging assumptions and how well supported they are.

Question: What did you base that confidence rating on? This question is a less confrontational way of asking "What data do you have?". I've found it's a good way to find out about the 'messy data' that people have to hand like conversations and workplace observations, as well as the more robust traditional sources like customer feedback.

Risk - X Axis

Where the problem sits on the x axis will likely be a blend of several different types of risk, including reputational risk, regulatory / legal risk, and financial risk due to (a) the cost of the solution and (b) the opportunity cost of not solving the problem. You can usually flush out the most important of these using a variation on the question below.

Question: What's the worst that could happen? This is a powerful question for two reasons. Firstly, it's open enough that people tend to answer with a long stream of consciousness that contains all the possible risks of shipping a solution to the problem. Secondly, after talking through the worst-case scenario, you often realise that there's actually relatively little risk associated with the problem and you can move it to one of the low-effort parts of the matrix.

The quadrants

Now you know roughly where you are on the matrix, let's explore the quadrants and what types of research sit in each.

Low risk, high problem clarity. You already understand the problem and it's low risk, so ship your solution and tweak it based on what you learn. Some examples here might be feature or layout updates to your digital learning systems or existing learning content, small internal workshops or job aids. To learn whether your solution is working as expected, you could look at:

High risk, high problem clarity. If you're pretty sure you can make a good bet on a solution, but there's still a risk of getting it wrong, you're best off de-risking by testing with a prototype or similar first. Some methods you could use here are:

Low risk, low problem clarity. If you're not clear on the problem but it's low risk, you can use lightweight research methods to formulate your bet on a solution. These research methods focus on quickly getting a feel for the problem without sinking too much time into detailed research.

High risk, low problem clarity. With a high-risk problem, you need plenty of data to be confident in creating a solution. This quadrant focuses on methods that generate rich data from which you can start to understand the context of your problem. Some useful methods here are:

In summary...

Getting the time and resource to do effective discovery for learning projects can be a challenge, but by articulating the need behind it in terms of risk and problem clarity, you can find it easier to win stakeholders over and get what you need. Understanding how confident you are in the solution, and the worst that could happen if you were wrong, can also help you avoid procrastinating over shipping your solution and de-risk it where appropriate.

The research methods outlined in this article are a starting point you can use in your own learning design toolkit.
